Nov 8, 2025 |

PSY 101

|

|

Conformity and Obedience: Yielding to Others

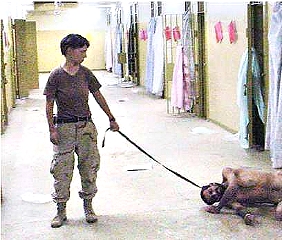

Were the soldiers involved in the tortures at Abu Ghraib prison in Iraq during 2003 and 2004 somehow "evil" or "bad apples"? Would you or I have done what they did?

Video Excerpt from CBC The Big Picture | The Human Behaviour Experiments

If you do not know what "Abu Ghraib" was about, read the entry on Wikipedia.

A. Conformity = People conform when they yield to real or imagined pressure from others

Question: Would I uphold my own beliefs when others around me disagree strongly? What would you do in these sorts of circumstances, e.g.,

- Members of a jury are convinced that someone is guilty of a crime, but you as a member of the jury have not been convinced by the evidence that the defendant is guilty. If you don't stick to your belief, the defendant will go to prison for many years. If you stick with your belief, the rest of the jury will scorn you and the judge will have to declare a mistrial.

- You hear the rumor from many different people that John X did something (abused someone, stole, cheated). You believe this rumor is completely inconsistent with what you know about John X. Do you pass on the rumor?

- Recall the words of Professor Dumbledore to Neville Longbottom (Harry Potter): "It takes courage to stand up to your enemies, but it takes even more courage to stand up to your friends."

Asch's Conformity Experiment: 1950s

Method

- A group of six or seven students must come up with a group judgment.

- All but one of these students are "confederates" of the experimenter, that is, they are acting together as the experimenter tells them to act. Only one of these participants is a genuine subject.

- On two preliminary judgments, the group responds accurately to the judgment task. However, on the third task, the confederates all respond incorrectly, to the surprise of the one genuine participant.

- On the next dozen or so trials, the confederates would give incorrect answers for most of the choices.

- The variable under study was the genuine participant's willingness to agree or disagree with the group's opinion.

Results

- In one study of 50 college-aged males (Asch, 1955), genuine participants conformed their judgments on 37% of all trials

- 13 of 50 (26% of participants) never agreed with the confederates' wrong judgments, but 14 of 50 (28%) conformed on more than half of the wrong judgments
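The percentages above follow directly from the counts reported for the 50 participants. A minimal Python sketch of that arithmetic is shown here; the function and variable names are illustrative only, and the "remaining" group is derived by subtraction rather than reported in these notes.

```python
def percent_of_sample(count: int, sample_size: int) -> float:
    """Return count expressed as a percentage of sample_size."""
    return 100 * count / sample_size


SAMPLE_SIZE = 50         # participants in the study described above
never_conformed = 13     # never agreed with the confederates' wrong judgments
conformed_majority = 14  # conformed on more than half of the wrong judgments

print(percent_of_sample(never_conformed, SAMPLE_SIZE))     # 26.0
print(percent_of_sample(conformed_majority, SAMPLE_SIZE))  # 28.0

# Assumption: everyone else conformed at least once, but on no more than half
# of the wrong judgments. This figure is inferred, not stated in the notes.
remaining = SAMPLE_SIZE - never_conformed - conformed_majority
print(remaining, percent_of_sample(remaining, SAMPLE_SIZE))  # 23 46.0
```

Read this way, the two reported groups together account for just over half of the sample, which suggests that most participants fell somewhere between consistent independence and frequent conformity.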

Subsequent Research

- Group Size: conformity increased as the group size rose from 2 to 4 and peaked at size 7

- Group Unanimity: conformity fell markedly if at least one member of the group broke with the group's judgment. Standing up to a unanimous group is much harder than standing up to one in which there is at least one dissenter.

B. Obedience

Question: Would I torture someone if I was told to do so by someone in authority?

- Obedience: a form of compliance in which people respond to the direct commands of someone in authority

- How do we make sense of Hitler's accomplices or the many soldiers and police who have harmed or tortured people in many different places?

Milgram's Obedience Studies: 1962

Video on YouTube: https://www.youtube.com/watch?v=xOYLCy5PVgM (5 min.)

Method

- A "learner" (L; confederate of Milgram) is strapped into a chair with electrical cords and attachments to deliver a "shock" to "help him learn".

- A "teacher" (T; the genuine participant) is brought to an adjoining room which contains a "shock generator" calibrated to transmit between "15" and "450 volts" of shock. An experimenter (E) instructs the "teacher" on what to do.

![Milgram Experimental Set-up](../psy101graphics/milgram_experiment_set-up.jpg)

- Note that the shock generator was fake; in reality, the "learner" received no shocks.

- However, across 30 levels, the "learner" continually made mistakes and the experimenter instructed the "teacher" to administer shocks of increasing power. As these grew stronger, the "learner" could be heard "screaming in pain", etc.

- Question: Would the participant ("teacher") obey the experimenter despite hearing and seeing signs of danger and distress?
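The figures in the method above are internally consistent: 30 levels running from 15 to 450 volts imply equal steps of 15 volts. The short Python sketch below works that out. The even spacing is an inference from those three numbers rather than something stated in these notes, and all names are illustrative.

```python
LOWEST_VOLTS = 15    # lowest label on the "shock generator"
HIGHEST_VOLTS = 450  # highest label on the "shock generator"
NUM_LEVELS = 30      # number of levels mentioned in the method

# Assumption: the levels are evenly spaced between the lowest and highest label.
step = (HIGHEST_VOLTS - LOWEST_VOLTS) // (NUM_LEVELS - 1)
shock_levels = [LOWEST_VOLTS + i * step for i in range(NUM_LEVELS)]

print(step)              # 15
print(shock_levels[:3])  # [15, 30, 45]
print(shock_levels[-1])  # 450
```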

Results

- 65% of participants delivered shocks beyond the "Danger" level to the highest level on the "shock generator" panel.

- Many protested that they were harming the learner and showed strong signs of distress (trembled, sweated, etc.). However, they continued to obey the experimenter. As Prof. Alan Elms, then Milgram's research assistant, recently noted (2011): "Some subjects wept as they administered the higher-level shocks. Others smirked or giggled or laughed hysterically; still others sweated profusely or clenched their teeth or pulled their hair. But for the most part they obeyed."

- Note that this experiment could no longer be performed in the US for ethical reasons: it had the potential to harm the participants by subjecting them to such high levels of distress.

Contemporary Critique of Milgram

Several social scientists have raised major questions about the meaning of or conclusions we can draw from Milgram's experiment. Note that it was NOT a single experiment. Rather Milgram (1974) ran 18 different variations of the design reported above. Reicher & Haslam (2011) challenge putting sole emphasis upon the variation in which 65% of the "teachers" went to 450 volts:

When the subjects sat in the same room as the learner and watched as he was shocked, however, the percentage of obedient teachers went down to 40. It fell further when the participant had to press the learner’s hand onto an electric plate to deliver the shock. And it went below 20 percent when two other “participants”—actually actors—refused to comply. Moreover, in three conditions nobody went up to 450 volts: when the learner demanded that shocks be delivered, when the authority was the victim of shocks, or when two authorities argued and gave conflicting instructions. In short, Milgram’s range of experiments revealed that seemingly small details could trigger a complete reversal of behavior—in other words, these studies are about both obedience and disobedience. Instead of only asking why people obey, we need to ask when they obey and also when they do not. (pp. 59-60)

Reicher & Haslam (2011) go on to point out a very different way of understanding what was happening:

"As psychologist Jerry Burger of Santa Clara University has observed, of [the] four instructions [given to the "teacher"] only the last is a direct order. In Obedience (1974), Milgram gives an example of one reaction to this prod:

Experimenter: You have no other choice, sir, you must go on.

Subject: If this were Russia maybe, but not in America.

(The experiment is terminated.)

In a recent partial replication of Milgram’s study, Burger [2009] found that every time this prompt was used, his subjects refused to go on. This point is critically important because it tells us that individuals are not narrowly focused on being good followers. Instead they are more focused on doing the right thing. The irony here is hard to miss. Milgram’s findings are often portrayed as showing that human beings mindlessly carry out even the most extreme orders. What the shock experiments actually show is that we stop following when we start getting ordered around. In short, whatever it is that people do when they carry out the experimenter’s bidding, they are not simply obeying orders." (p. 61)

So, possibly this experiment needs to be more critically evaluated. People may not be completely blind and willing to follow authority. Rather, in the social setting of an experiment, there may be forces such as social identification and interpersonal bonding that help to explain such behavior as well.

C. The Power of the Situation

The Stanford Prison "Experiment" (SPE, August 1971)

- The Stanford Prison Simulation (August 1971) -- Slide Show

- The Stanford Prison Experiment (2015 film) -- Trailer (2' 44") on YouTube

Philip Zimbardo (b. 1933 in The Bronx, NY, d. 2024 in San Francisco, CA). As a professor at Stanford University he designed an "experiment" to explore why prisons become such abusive and degrading places. He selected 24 male students who had been screened to be physically healthy and psychologically stable. They were randomly divided into two groups: guards and prisoners.

On a quiet Sunday morning, the "prisoners" were "arrested" at their dormitory rooms or homes, handcuffed, and driven in police cars to the simulated prison in the basement of the Psychology Dept. building on campus.

- The prisoners were fingerprinted, blindfolded, and put in a holding cell.

- The prisoners were humiliated: stripped naked, searched, "deloused," and issued a uniform (a dress or smock with no underwear). A chain was attached to each prisoner's right leg and all were required to wear stocking caps (instead of having their heads shaved). Each prisoner was given an ID number.

- The guards were given no training but were given the authority to do whatever they thought necessary to maintain order in the prison and command the prisoners' respect. An undergraduate student served as "warden."

- Prisoners were awakened at 2:30 am for a "count". "Rebellious" prisoners were made to do push-ups.

- Eventually prisoners rebelled. Guards were particularly harsh in treating the leader of the "rebellion" by denying him cigarettes and other things.

- The first "prisoner" began showing strong negative emotional reactions within 36 hours.

- By the end of five days, the experiment had to be ended. Some prisoners were clearly showing withdrawn behavior and some of the guards had begun to act sadistically.

Zimbardo and his colleagues explain the transformation of these students into prisoners and guards as the result of two factors:

- Social Roles: A social role is a widely shared expectation about how people in certain positions are supposed to behave.

- The Power of the Situation: Because the simulation (re)created a realistic environment so different from the one the students were used to, they were overwhelmed by these differences and had little to rely upon to maintain their typical behavioral patterns.

However, Zimbardo has been the subject of harsh criticism for conducting this so-called "experiment." He has argued that he never expected the students' behavior to deteriorate so quickly and powerfully. His 2007 book, The Lucifer Effect, describes how powerful situations undermine the moral behavior of otherwise good people.

Critics (e.g., Haslam et al., 2019; Perry, 2018; Le Texier, 2019) argue that the SPE was fundamentally flawed for a variety of reasons:

- There was no real hypothesis that was being tested.

- The SPE was less an "experiment" and more of a "theater piece" in which participants were following both explicit and implicit scripts about what they should be doing. The "guards" knew without being told explicitly that they needed to be tough on the "prisoners" although only 1/3 of the guards were ever actually sadistic in their behavior.

- Ethical concerns: The (unanticipated) abuse suffered by the "prisoners" at the hands of the "guards" would be considered wrong if done today and the experiment should have been stopped much sooner.

- Some participants who were interviewed more than three decades later claim that they were mostly play-acting what they thought Zimbardo wanted them to do. According to interviews with some "prison guards" reported by Le Texier (2019), these guards were actually told how they should behave with the "prisoners," for example, that they needed to be "tough guards." Zimbardo has denied that he told them how they needed to act.

- "For nearly a half century [the SPE] has been understood to show that assigning people to a toxic role will, on its own, unlock the human capacity to treat others with cruelty. In contrast, principles of identity leadership argue that roles are unlikely to elicit cruelty unless leaders encourage potential perpetrators to identify with what is presented as a noble in-group cause and to believe their actions are necessary for the advancement of that cause. … Through examination of material in the SPE archive, we present comprehensive evidence that, rather than Guards conforming to role of their own accord, Experimenters directly encouraged them to adopt roles and act tough in a manner consistent with tenets of identity leadership." (Haslam et al. 2019; boldface and italics added).

- To be fair to Zimbardo and his colleagues in the SPE, I note the recent research/analysis of Scott-Bottoms (2020) which argues that there were distinctive differences in how the guards reacted during the three work "shifts" they followed (Morning, 2 am-10 am; Day, 10 am-6 pm; Evening, 6 pm-2 am). It was simply not true that all the guards adopted very tough or, even, sadistic stands. And, indeed, all the participants knew that they were acting and, thus, were, of course, challenged to figure out how they should act given that they were being paid for their performance.

References

Elms, A. (2011, December 10). From CNN Blogs. Posted at The Situationist website. Retrieved Jan 11, 2012 from <http://thesituationist.wordpress.com/category/classic-experiments>. Originally posted at <http://thechart.blogs.cnn.com/2011/12/09/my-summer-with-stanley-milgram/?hpt=he_c2>.

Further sources on the Milgram experiment can be found here:

- Burger, J. (2009). Replicating Milgram: Would people still obey today? American Psychologist, 64, 1-11.

- Haslam, S. A., Reicher, S. D., & Sutton, J. (2011). The shock of the old: Reconnecting with Milgram’s obedience studies, 50 years on. Special Issue of The Psychologist, 24(9).

- Haslam, S. A., & Reicher, S. D. (2007). Beyond the banality of evil: Three dynamics of an interactionist social psychology of tyranny. Personality and Social Psychology Bulletin, 33, 615-622.

- Milgram, S. (1974). Obedience to authority: An experimental view. New York, NY: Harper & Row.

- Reicher, S. D., & Haslam, S. A. (2011). After shock? Towards a social identity explanation of the Milgram ‘obedience’ studies. British Journal of Social Psychology, 50, 163-169.

- Reicher, S. D., & Haslam, S. A. (2011). Culture of shock: A fresh look at Milgram’s obedience studies. Scientific American Mind, 22(6), 57-61.

The Stanford Prison Experiment (http://www.prisonexp.org) site maintained by Philip Zimbardo

Haslam, S. A., Reicher, S. D., & Van Bavel, J. J. (2019). Rethinking the nature of cruelty: The role of identity leadership in the Stanford Prison Experiment. American Psychologist, 74(7), 809-822. http://dx.doi.org/10.1037/amp0000443

Le Texier, T. (2019). Debunking the Stanford Prison Experiment. American Psychologist, 74(7), 823–839. https://doi.org/10.1037/amp0000401

Perry, G. (2018, October 13). The evil inside us all. New Scientist, pp. 38-40.

Scott-Bottoms, S. (2020). The dirty work of the Stanford Prison Experiment: Re-reading the dramaturgy of coercion. Incarceration, 1(1), 1-18.

Zimbardo, P. (2007). The Lucifer effect: Understanding how good people turn evil. New York, NY: Random House.

This page was originally posted on 11/10/03